AI Writing Assistant in Research: Where Is the Ethical Boundary?

Ryan McCarroll

Jan 22, 2026

AI writing assistants are now everywhere. Many students and researchers use tools like AnswerThis, ChatGPT, Grammarly, and others. These tools can rewrite sentences, fix grammar, summarize papers, and even help generate ideas. For some people, AI makes writing faster and less stressful. For others, it raises a serious question.

Where is the ethical boundary?

This question matters more than ever. Journals, universities, and research labs are still updating their rules. Some allow AI use as long as it is disclosed. Others restrict it. Many researchers feel confused: they want to use AI ethically, but they also want to avoid breaking academic integrity rules.

In this blog, we will explore what is ethical, what is risky, and how you can use AI writing tools the right way.

Why Researchers Use AI Writing Assistants

Research writing is hard. Even experienced researchers struggle with writing clearly. Many researchers also write in a second language. Others feel overwhelmed by deadlines, formatting rules, and peer review.

This is why AI writing assistants feel like a gift. They can help with grammar. They can improve sentence flow. They can suggest a more academic tone. They can reduce repetition. They can also help create outlines, research questions, and structure.

But writing is not just typing words. Research writing is linked to thinking. It reflects your understanding, your logic, and your contribution. That is why ethical boundaries matter.

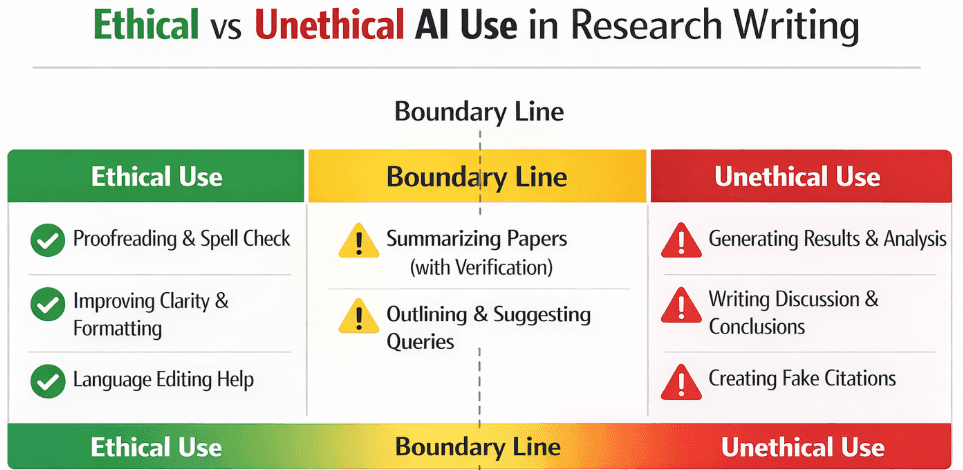

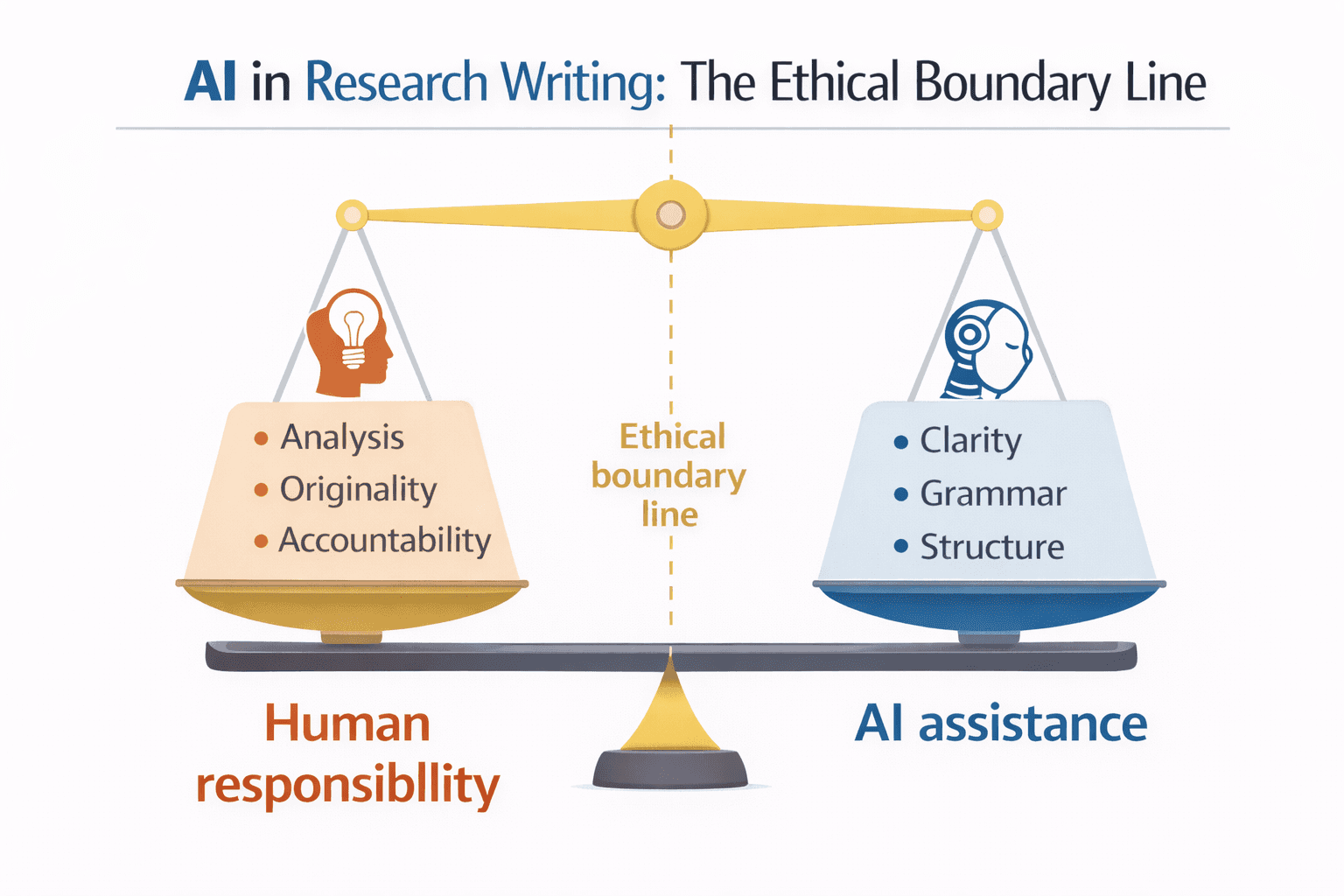

The Ethical Boundary: A Simple Definition

The ethical boundary is crossed when AI changes the research from being your work to being AI’s work.

A helpful way to think about it is this:

If AI is helping you communicate your ideas better, it is usually ethical.

If AI is creating your ideas, arguments, analysis, or findings, it becomes unethical.

In research, the most important things must come from the researcher. This includes the hypothesis, reasoning, interpretation, novelty, and accountability.

You are the author. So you must stay responsible.

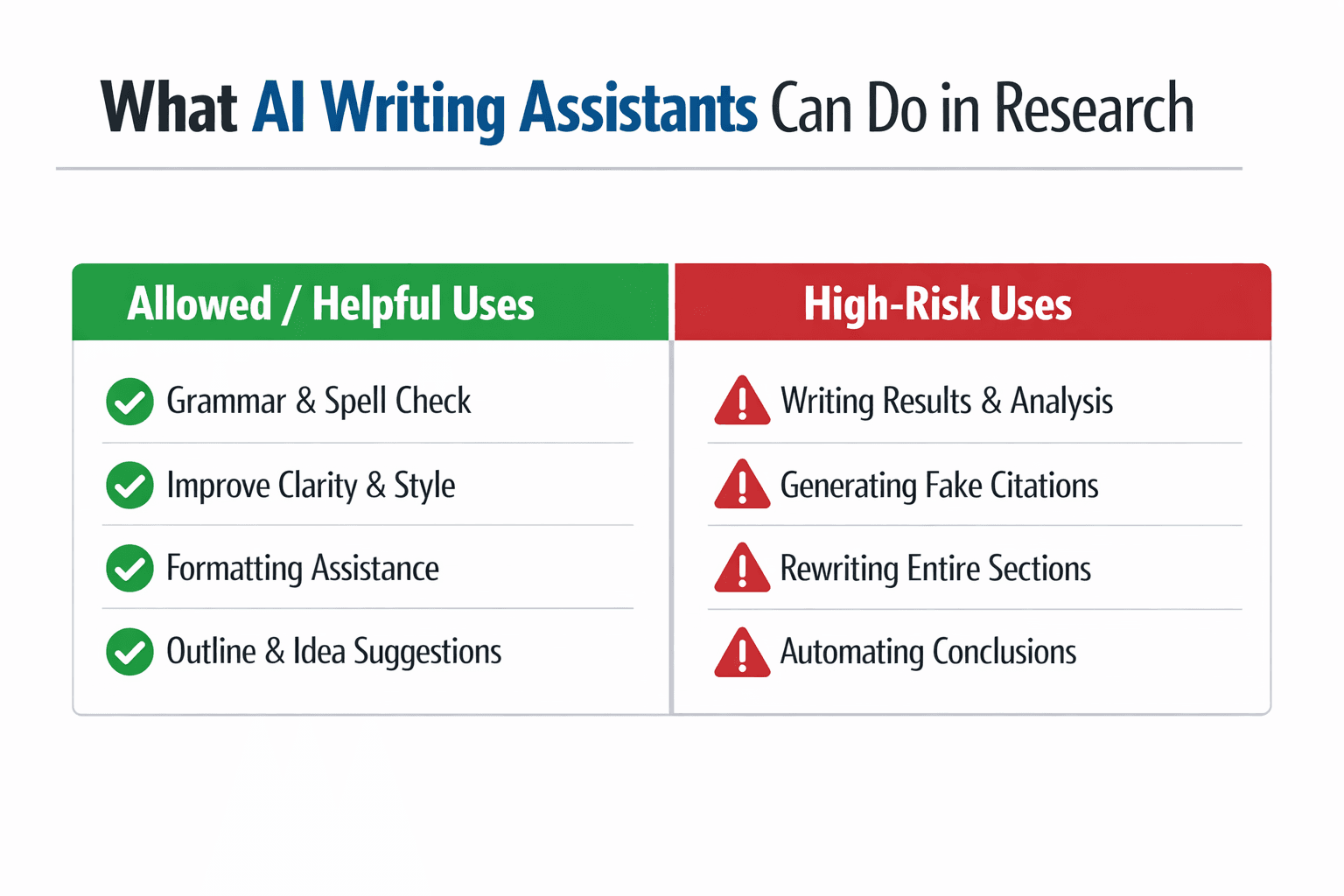

What Counts as Ethical Use of AI in Research Writing?

Ethical AI use is usually focused on improving language and readability. Many journal publishers allow this type of use, as long as the human authors stay accountable.

Here are examples of generally ethical usage:

You write a paragraph, then ask AI to improve clarity and grammar.

You ask AI to reduce wordiness and improve academic tone.

You use AI to check spelling, sentence structure, and coherence.

You use AI to help create an outline before writing.

You use AI to summarize your own draft and suggest improvements.

Many researchers use AI for these tasks, especially non-native English speakers. For them, language editing can make publishing fairer and their findings easier to communicate.

Some publishers have openly stated that AI tools can be used for language editing, but the authors remain responsible for the content.

For example:

Nature states that large language models should not be listed as authors and that authors must take full responsibility for the content.

https://www.nature.com/articles/d41586-023-00191-1

The ICMJE authorship criteria emphasize accountability and contribution, which AI cannot meet.

https://www.icmje.org/recommendations/

What Is Unethical or High-Risk Use?

Unethical use happens when AI does intellectual work that should be done by the researcher. This includes analysis, interpretation, and producing original academic arguments that you did not develop yourself.

These are high-risk uses:

If AI writes your entire introduction, literature review, or discussion.

If AI generates interpretations of your results without your own analysis.

If AI produces methodology descriptions that you did not actually follow.

If you copy AI text directly into your paper without editing or understanding.

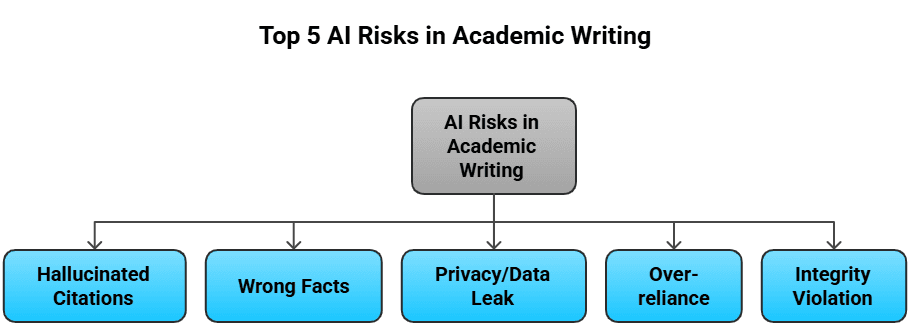

If AI generates citations and you include them without checking.

This last point is extremely important. AI tools can confidently produce citations that do not exist. This is often called hallucination, and it can destroy credibility.

A major concern in research is that AI-generated content can look fluent and academic while being wrong. This risk has been discussed widely in academic publishing.

For example:

A key paper explains how LLMs can generate convincing but incorrect text:

Bender et al. (2021), "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?"

https://dl.acm.org/doi/10.1145/3442188.3445922

AnswerThis (AI Writing Assistant Built for Research Workflows)

AnswerThis is a bit different from a general AI text editor, because it is designed around research evidence, not just rewriting. Instead of only polishing sentences, it helps you generate structured literature reviews, keep citations attached to claims, and move from reading papers to writing with sources much faster. That matters ethically, because one of the biggest risks with AI writing tools in research is producing confident-sounding text that is not properly grounded in real papers.

In practice, AnswerThis works best when you treat it like a research companion rather than a ghostwriter. You can use it to build a literature review draft with citations, collect key ideas inside a Notebook, and then paraphrase or refine sections while keeping your sources visible. This makes it easier to stay accountable for what you are saying: you are not relying on AI to invent arguments; you are using it to organize, rewrite, and communicate research you can actually verify.

Pricing:

AnswerThis offers free AI writing tools, but the free tier is limited to 5 credits.

AnswerThis Pro costs $30/month, or $144/year on the annual plan. A free plan is available so you can try the core workflow before upgrading. You can also invite your team to research alongside you with the Teams plan.

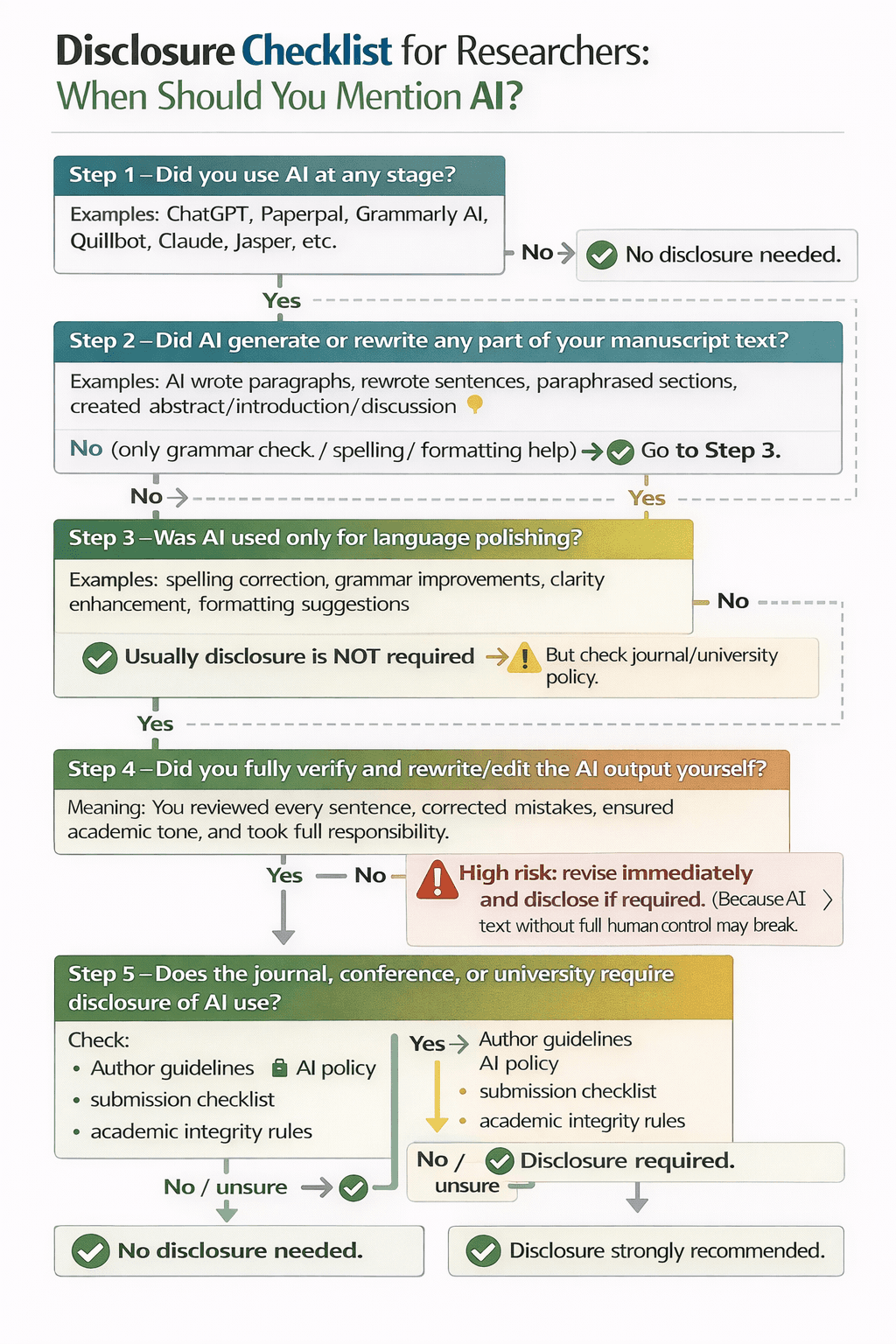

Transparency: Do You Need to Disclose AI Use?

This depends on where you are submitting.

Some journals now require disclosure if AI tools were used, especially if they supported writing. Some universities also require students to disclose the use of generative AI.

For example:

Elsevier provides guidance on AI use and disclosure.

https://www.elsevier.com/about/policies-and-standards/publishing-ethics

Springer Nature also provides policies related to AI tools.

https://www.springernature.com/gp/editorial-policies/ai

Even when disclosure is not required, transparency is a strong ethical habit. It builds trust. It protects you if your work is questioned later.

A simple disclosure sentence can be enough, for example: "This manuscript was language-edited using an AI writing assistant. The authors take full responsibility for the content."

The Biggest Ethical Risks Researchers Should Know

Plagiarism is not the only risk. There are deeper issues.

One risk is loss of authorship authenticity. When AI writes too much, your writing voice disappears. This can also affect your learning, especially for early-stage PhD students.

Another risk is hidden errors. AI may oversimplify technical terms and distort their meaning. It can also introduce incorrect claims.

A third risk is data privacy. Researchers sometimes paste unpublished text, sensitive patient data, or confidential results into AI tools. This can violate ethics protocols and institutional policies.

If you work with sensitive data, you should follow privacy rules strictly. Always check institutional policies before using any AI system.

A Practical Ethical Rule for Researchers

Here is a simple ethical rule that fits the policies of most universities and journals:

AI can help you write better. But AI should not think for you.

So you can use AI to improve expression.

But not to create intellectual work.

To make it even simpler, use this test:

If a supervisor asks you:

“Explain why you wrote this sentence this way.”

You must be able to answer confidently.

If you cannot explain it, then AI has crossed the boundary.

The Future: AI Is Not Going Away

Many researchers worry about AI detectors. But detection is not the real issue. The real issue is research integrity. Many researchers want their text to keep a human touch, and some go as far as using tools that "humanize" AI-generated text.

Journals are not banning AI completely. Instead, they are focusing on transparency, responsibility, and ethical use. That means researchers should build AI literacy, not fear AI.

The future belongs to researchers who can use AI as a support tool while maintaining human accountability.

AI should become a research assistant, not a ghostwriter. You shouldn't just prompt and paste.

Expert advice, trusted sources, and free AI writing tools can help researchers improve their writing skills and get the most out of collaborative writing assistants.

Final Thoughts

AI writing assistants can be useful and ethical. They can help researchers write clearly and confidently. They can reduce language barriers. They can make writing faster.

But the ethical boundary must be respected.

Use AI for clarity, not for generating findings.

Use AI for editing, not for intellectual contribution.

Never use fabricated AI-generated citations.

Always verify facts.

And follow journal or university policies.

If used wisely, AI will not damage research integrity. It will improve research communication.